Section A - Credibility Weighted Estimates Flashcards

Estimation of Policy Liabilities

Mack (2000): What is the Benktander method? Describe the method and the associated formula for the second iteration of the BF method.

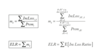

- Benktander method derives the ultimate loss by credbility-weighting the chain ladder and expected loss ultimates

- Iteration 1:

UltBF = Loss + (1 - %Paid) x Prem x ELR

UBF = Ck + qkU0

- Iteration 2:

UGB = Loss + (1 - %Paid) x UltBF

UGB = Ck + qkUBF

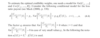

Mack (2000): Benktander as a Credibility-Weighting of the Chain Ladder & Expected Loss Ultimates

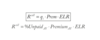

UGB = ?

Chain Ladder Ultimate = Loss x CDF

UCL = Ck ÷ pk

Benktander:

qk = 1 - (CDF)-1

UGB = (1 - qk2)UCL + qk2U0

UltimateGB = (1 - %Unpaid2)xUltCL + %Unpaid2 x Prem x ELR

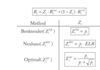

Mack (2000): Benktander as a Credibility-Weighting of the Chain Ladder & BF Reserves:

RGB = ?

ReserveGB = (1 - %Unpaid) x ReserveCL + %Unpaid x ReserveBF

RGB = (1 - qk) x RCL + qk x RBF

- Esa Hovinen Reserve = REH = cRCL + (1-c)RBF

- when c = pk then REH = RGB

Mack (2000): What happens when we iterate between reserves and ultimates indefinitely?

- As the number of iterations increases, the weight on the chain ladder method increases until it converges to the chain ladder method entirely

Note: We are iterating the BF method to infinity where q(∞) goes to zero so 100% weight on CL method

Mack (2000): What are the advantages of the Benktander method?

- Lower MSE

- Better approximation of the exact Bayesian procedure

- Superior to chain ladder since more weight is given to the priori expectation of ultimate losses

- Superior to BF method since it gives more weight to actual loss experience

Mack (2000): When referring to the chain ladder method, what average are you using to calculate the age-to-age factor?

- Always use the all year volume weighted average

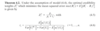

Mack (2000): Given the following information for AY 2012 at 12 months, which reserve has the smaller MSE?

c* = 0.32

Ck = $3,000

UCL = $5,000

- Neuhaus showed that MSE of RGB is almost as small as the optimal credibility reserve, c*, unless pk is small at the same time c* is large

-

RGB has a smaller MSE than RBF when c*>pk/2 holds

- makes sense as c* is closer to c=pk than it is to zero

- 0.32>0.3 so RGB has smaller MSE

Hurlimann: What is the main difference between Mack (2000) and Hurlimann estimate of claim reserves?

- Hurlimann uses expected incremental loss ratios (mk) to specify the payment pattern rather than LDFs from actual losses

- Hurlimann uses two reserving methods:

- Individual Loss Ratio Reserve (Rind) - CL Method

- Collective Loss Ratio Reserve (Rcoll) - BF method

-

Key Idea: Rind and Rcoll represent extremes of credibility on the actual loss experience so that we can calculate a credibility-weighted estimate that will minimize the MSE of the reserve estimate

- provides an optimal credibility weight for combining the CL or individual loss ratio reserve with the BF or collective loss ratio reserve

Hurlimann: Describe the notation that Hurlimann uses.

pi = ?

qi = ?

UiBC = ?

Uicoll = ?

Uiind = ?

Ui(m) = ?

….

pi = loss ratio payout factor (loss ratio lag factor); proportion of total ultimate claims from origin period i expected to be paid in development period n-i+1

qi = 1 - pi = loss ratio reserve factor

UiBC = Ui(0) = burning cost of total ultimate claims from origin period i

Uicoll = Ui(1) = collective total ultimate claims from origin period i

Uiind = Ui(∞) = individual total ultimate of claims for origin period i

Ui(m) = ultimate claim estimate at the mth iteration for origin period i

Ricoll = collective loss ratio claims reserve for origin period i

Riind = individual loss ratio claims reserve for origin period i

Ric = credible loss ratio claims reserve

RiGB = Benktander loss ratio claims reserve

RiWN = Neuhaus loss ratio claims reserve

Ri = ith period claims reserve for origin period i

R = total claims reserve

mk = expected loss ratio in development period k (incremental)

n = number of origin periods

Vi = premium in origin period i

Sik = paid claims from origin period i as of k years development where 1≤i, k≤n

Cik = cumulative paid claims from origin period i as of k years of development

Hurlimann: State the formulas for the following:

Total Ultimate Claims =

Cumulative Paid Claims =

i-th Period Claims Reserve =

Total Claims Reserve =

Total Ultimate Claims = Σk=in Sik

Cumulative Paid Claims = Cik = ΣSij (sum over j = 1, 2, …,k)

i-th Period Claims Reserve = Ri =Σ Sik (sum over k = n-i+2, ….., n)

Total Claims Reserve = R = ΣRi (sum over i=2, …., n)

Hulimann: Expected Loss Ratio

mk =

ELR =

Hurlimann: What are the forumlas for the loss ratio payout factor and the loss reserve factor?

- different from Mack (2000) as this paper uses the loss ratios for pi not the LDF’s

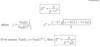

Hurlimann: What are the formulas for the individual loss ratio claims estimate?

Hurlimann: What are the formulas for the collective loss ratio claims estimate?

Hurlimann: What are the crediblity-weighted loss ratio claim reserve formulas for Banktender, Neuhaus and Optimal?

Hurlimann: Formulas for Optimal Credibility Weights (Simplified)

- When f=1, we get the simplified version of topt = √p

- the optimal credibility weight, zopt, is the weight that minimizes the MSE between the actual reserve and the credibility reserve

Hurlimann: What is the basic form of tiopt?

tiopt = E[⍺i2(Ui)] / (var(UiBC) + var(Ui) - E[⍺i2(Ui)])

- this is the generalized version that is not used often

- Hurlimann starts off with this form and eventually gets to the ‘f’ form by using distributions to estimate the parameters in the above equation.

Hurlimann: What is an advantage of the collective loss ratio claims reserve over the traditional BF reserve?

- Different actuaries come to the same result if the same premiums are used, because judgement isn’t used to select the ELR.

Hurlimann: How do the collective and individual loss ratio claims reserve estimates represent two extremes?

Rind - 100% credibility is placed on the cumulative paid claims (Ci) and ignores the burning cost estimate (UBC)

Rcoll - places 100% credibility on the burning cost and nothing on the cumulative paid losses

Hurlimann: The mean squared error for the credible loss ratio reserve is given by:

mse(Ric) =

- For collective loss ratio MSE, set Z=0

- For individual loss ratio MSE set Z = 1

Hurlimann: Under the following assumption, what are the optimal credibility weights (Z*) that would minimize the MSE of the optimal reserve (Ric)?

Assumption:

- Basic Form

Hurlimann: Under the assumption 4.4 (conditional for loss ratio payout) in the previous slide, what are the MSE for the following:

mse(Ricoll) = ?

mse(Riind) = ?

mse(Ric) = ?

mse(Ricoll) = E[⍺i2(Ui)] * qi*(1 + qi/ti)

mse(Riind) = E[⍺i2(Ui)] * qi/pi

mse(Ric) = E[⍺i2(Ui)] * [Zi2/pi + 1/qi + (1-Zi)2/ti] * qi2 (basic method - can be used for all methods)

Hurlimann: How would you estimate reserves for the Optimal Cape Cod Method?

- Use the loss ratio to get ultimate for Rcoll and to get pk use LDFs

- LR = ΣCi, n-i+1 / ΣpiCLVi

- the loss ratio takes the total paid losses to date and divides the losses by the earned premium to date which means premium is adjusted to be aligned with losses seen to date

- Formulas are the same for Rind, Rcoll, and Z using t1/2

- The credibility weighted reserve is not the Cape Cod method. It’s a weighted method between the chain ladder and cape cod.

Hurlimann: How would you estimate reserves for the Optimal BF Method?

- Replace the loss ratio that Hurlimann uses to get pk with the CDF

- LRi =should be given to you as this is some selected initial loss ratio for an origin period

- pk is derived using LDFs

- Formulas are the same for Rind, Rcoll, and Z using t = √p

- if not optimal, then Z = p

- the BF method is a Benktander-type credibility mixture where the loss ratio is pre-selected

- in the Cape Cod method, the loss ratio is derived using all years

- the credibility mixture does not equal the BF method; the collective reserves are equal to the standard BF reserves which is weighted with the chain ladder reserves.

Hurlimann: Briefly describe 3 differences between Hurlimann’s method and the Benktander method.

- Hurlimann’s method is based on a full development triangle; whereas, the Benktander method is based on a single accident year

- Hurlimann’s method requires a measure of exposure (e.g. premiums) for each accident year

- Hurlimann’s method relies on loss ratios rather than link ratios to determine reserves